Ridge Regression

First, we need to understand what bias and variance are.

Bias:

Bias refers to the simplifying assumptions a machine learning model makes to make the learning process easier. These assumptions help the model learn from the training data faster, but if the bias is too high, the model loses flexibility.

A model with high bias ignores much of the complexity in the relationship between the input and the output. The model becomes too simple and ultimately underfits the data.

Variance:

Variance describes how much a model's fit depends on the particular training data it sees. A high-variance model performs very well on the training data, but its performance drops significantly on the test data.

High variance means that the model learns the training data, including its noise, to such an extent that its performance on new data suffers. In other words, the model overfits the data.
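To make the two failure modes concrete, here is a small pure-Python sketch on made-up data. The data are hypothetical: `y` is roughly `3 * x`, with a ±1 wiggle on the training points. The sketch compares three models:

- one that always predicts the training mean (an extreme high-bias model);
- one that memorizes the training set by nearest-neighbour lookup (an extreme high-variance model);
- an ordinary least-squares line.

All helper names are illustrative only.

```python
def mse(model, data):
    """Mean squared error of `model` (a function x -> prediction) on (x, y) pairs."""
    return sum((y - model(x)) ** 2 for x, y in data) / len(data)

# Hypothetical illustrative data: roughly y = 3x, with +/-1 noise on the training set.
train = [(x, 3 * x + (1 if x % 2 else -1)) for x in range(10)]
test = [(x + 0.3, 3 * (x + 0.3)) for x in range(10)]

# High-bias model: ignores x entirely and always predicts the training mean.
mean_y = sum(y for _, y in train) / len(train)
def mean_model(x):
    return mean_y

# High-variance model: memorizes the training set (1-nearest-neighbour lookup).
def memorizer(x):
    _, y_nearest = min(train, key=lambda pair: abs(pair[0] - x))
    return y_nearest

# A straight line fitted by ordinary least squares sits between the two extremes.
x_bar = sum(x for x, _ in train) / len(train)
sxx = sum((x - x_bar) ** 2 for x, _ in train)
sxy = sum((x - x_bar) * (y - mean_y) for x, y in train)
slope = sxy / sxx
intercept = mean_y - slope * x_bar
def line(x):
    return intercept + slope * x

for name, model in [("mean (high bias)", mean_model),
                    ("memorizer (high variance)", memorizer),
                    ("least-squares line", line)]:
    print(f"{name:28s} train MSE={mse(model, train):7.2f}  test MSE={mse(model, test):7.2f}")
```

The results show the two failure modes side by side:

- The mean model has a large error on both sets, which is underfitting.
- The memorizer scores exactly zero on the training set but not on the test set, which is the train/test gap that signals overfitting.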

Now let’s turn to the main topic: what is Ridge Regression?

Ridge regression is a technique for analyzing multiple regression data that suffers from multicollinearity (strong correlation between the independent variables). Under multicollinearity, the least squares estimates of the basic linear regression model remain unbiased, but their variances become so large that the estimates, and the predictions built on them, can be far from the true values.
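The instability caused by multicollinearity can be seen with two almost identical features. The sketch below is pure Python; the data and the helper `ridge_2features` are hypothetical. It solves the ridge normal equations (XᵀX + αI)β = Xᵀy twice: once with α = 0, which is ordinary least squares, and once with α = 1. It then nudges a single target value by 0.1 and checks how far the coefficients move. The features are centered, so no intercept is fitted.

```python
def ridge_2features(x1, x2, y, alpha):
    """Solve (X^T X + alpha * I) beta = X^T y for two centered features, no intercept.

    alpha = 0 reduces to ordinary least squares.
    """
    s11 = sum(a * a for a in x1) + alpha
    s22 = sum(b * b for b in x2) + alpha
    s12 = sum(a * b for a, b in zip(x1, x2))
    t1 = sum(a * t for a, t in zip(x1, y))
    t2 = sum(b * t for b, t in zip(x2, y))
    det = s11 * s22 - s12 * s12
    # Cramer's rule for the symmetric 2x2 system [[s11, s12], [s12, s22]] beta = [t1, t2].
    return ((s22 * t1 - s12 * t2) / det, (s11 * t2 - s12 * t1) / det)


def max_change(before, after):
    """Largest absolute change between two coefficient tuples."""
    return max(abs(b - a) for a, b in zip(before, after))


# Hypothetical centered data: x2 is an almost exact copy of x1 (strong multicollinearity).
x1 = [-2.0, -1.0, 0.0, 1.0, 2.0]
x2 = [-1.99, -1.01, 0.0, 1.01, 1.99]
y = [2.0 * a for a in x1]          # targets generated from x1 alone
y_nudged = [y[0] + 0.1] + y[1:]    # one target value changed by 0.1

for alpha in (0.0, 1.0):
    before = ridge_2features(x1, x2, y, alpha)
    after = ridge_2features(x1, x2, y_nudged, alpha)
    print(f"alpha={alpha}: before={before}, after={after}, "
          f"max change={max_change(before, after):.4f}")
```

With α = 0, the tiny nudge makes the coefficients swing from roughly (2, 0) to roughly (0.3, 1.7). With α = 1, they barely move. The ridge estimates are slightly biased (their sum is a little under 2) but far more stable.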

The main advantage of ridge regression is that it helps avoid overfitting. It works the same way as linear regression but adds an extra penalty term, scaled by the parameter α, that helps reduce overfitting. The goal of any machine learning model is to generalize the underlying pattern in the data, i.e., the model should perform well on both the training data and the test data. Overfitting occurs when the trained model performs well on the training data but poorly on the test data.

Let’s take an example. Suppose we have a dataset of employee salaries based on their years of work experience. This small dataset is only for illustration; a real-world dataset would typically have thousands of rows and many more columns.

| Years of experience | Salary |
|---|---|
| 1.1 | 39343 |
| 1.3 | 46205 |
| 1.5 | 37731 |
| 2 | 43525 |

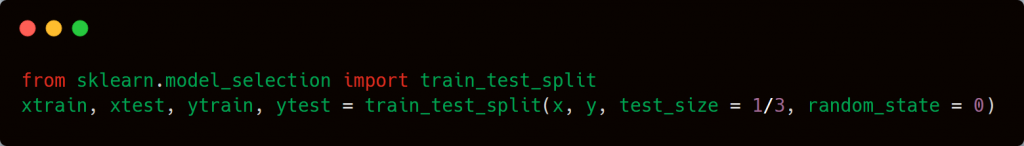

To let the model learn the relationship between the input and the output data, we first split the dataset into a training set and a test set.

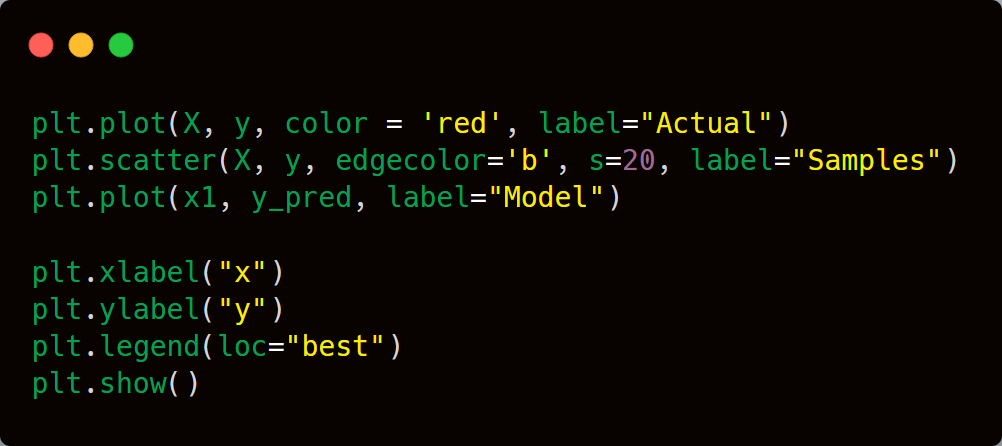

The code for splitting the data is given below.

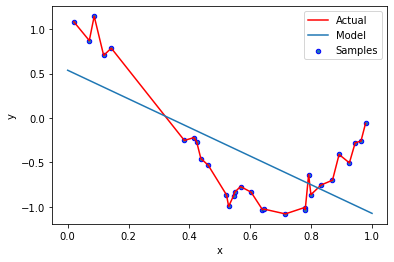

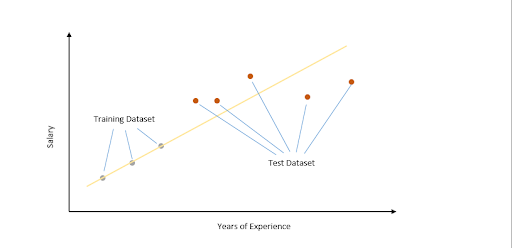
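The splitting code above appears only as an image, so it cannot be reproduced exactly here. As a rough, dependency-free stand-in, the sketch below shuffles the rows with a seeded random generator and cuts them into a training set and a test set. The helper `train_test_split_rows` and the 50/50 ratio are assumptions chosen for this tiny dataset. In practice, scikit-learn's `train_test_split` function from `sklearn.model_selection` is a common choice for this step.

```python
import random

def train_test_split_rows(rows, test_fraction=0.5, seed=0):
    """Shuffle a copy of `rows` with a seeded RNG and split it into (train, test)."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

# The four (years_of_experience, salary) rows from the table above.
data = [(1.1, 39343), (1.3, 46205), (1.5, 37731), (2.0, 43525)]
train, test = train_test_split_rows(data, test_fraction=0.5, seed=0)
print("train:", train)
print("test: ", test)
```

Fixing the seed makes the split reproducible, which matters when several models are compared on the same test set.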

We plot the data and apply the least squares method (the classic linear regression model) to obtain the best-fit line. Because the line passes through every point in the training set, the sum of squared residuals on the training set is zero.

On the test set, however, the sum of squared residuals is large, so the line has high variance. Here, variance refers to the difference in fit (or variability) between the training set and the test set.

This regression model is overfitting the training data.
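The zero-training-error situation is easy to reproduce with the salary table. The article's actual split is not shown in text, so purely for illustration the sketch below uses the first and last rows as the training set and the middle two rows as the test set. It fits a least-squares line through the two training points and reports the sum of squared residuals (SSR) on each set. The helper names are illustrative.

```python
def fit_least_squares(points):
    """Ordinary least squares for y = intercept + slope * x with one feature."""
    n = len(points)
    x_bar = sum(x for x, _ in points) / n
    y_bar = sum(y for _, y in points) / n
    sxx = sum((x - x_bar) ** 2 for x, _ in points)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in points)
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope

def sum_squared_residuals(intercept, slope, points):
    """Sum of squared differences between observed and predicted y."""
    return sum((y - (intercept + slope * x)) ** 2 for x, y in points)

# Illustrative split of the salary table (an assumption, not the article's split).
train = [(1.1, 39343), (2.0, 43525)]
test = [(1.3, 46205), (1.5, 37731)]

intercept, slope = fit_least_squares(train)
train_ssr = sum_squared_residuals(intercept, slope, train)
test_ssr = sum_squared_residuals(intercept, slope, test)
print(f"slope={slope:.2f}  train SSR={train_ssr:.6f}  test SSR={test_ssr:.2f}")
```

With only two training points, the line passes through both exactly. The training SSR is therefore zero (up to floating-point rounding), while the test SSR is in the tens of millions.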

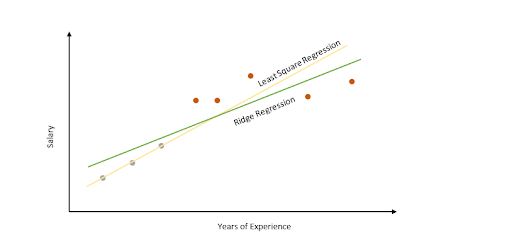

Ridge regression works by introducing a small amount of bias in exchange for a reduction in variance. It does this by shrinking the slope of the line. The model may perform a little worse on the training set, but it tends to perform more consistently across the training and test sets. Because the ridge penalty (α) reduces the slope, the model becomes less sensitive to changes in the independent variable (in our example, “years of experience”).
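The sketch below compares the least-squares line with a ridge line on an illustrative split of the salary table: first and last rows for training, middle rows for testing. It assumes, as is common, that only the slope is penalized. For a single feature this gives a closed form:

- slope = Sxy / (Sxx + α)
- intercept = ȳ − slope·x̄

Here Sxx and Sxy are the centered sums of squares and cross-products. The value α = 1.0 is an arbitrary choice; a sensible value depends on the scale of the data. The helper names are hypothetical.

```python
def fit_ridge_1d(points, alpha):
    """Ridge fit of y = intercept + slope * x with only the slope penalized.

    alpha = 0 gives ordinary least squares.
    """
    n = len(points)
    x_bar = sum(x for x, _ in points) / n
    y_bar = sum(y for _, y in points) / n
    sxx = sum((x - x_bar) ** 2 for x, _ in points)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in points)
    slope = sxy / (sxx + alpha)
    return y_bar - slope * x_bar, slope

def sum_squared_residuals(intercept, slope, points):
    """Sum of squared differences between observed and predicted y."""
    return sum((y - (intercept + slope * x)) ** 2 for x, y in points)

# Illustrative split of the salary table (an assumption, not the article's split).
train = [(1.1, 39343), (2.0, 43525)]
test = [(1.3, 46205), (1.5, 37731)]

for label, alpha in (("least squares", 0.0), ("ridge, alpha=1.0", 1.0)):
    b0, b1 = fit_ridge_1d(train, alpha)
    print(f"{label:17s} slope={b1:9.2f}  "
          f"train SSR={sum_squared_residuals(b0, b1, train):14.2f}  "
          f"test SSR={sum_squared_residuals(b0, b1, test):14.2f}")
```

On this split, the ridge line has a larger training SSR but a smaller test SSR than the least-squares line, which is the trade described above. With only four points, this is an illustration, not evidence that ridge will always help.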

Least Squares Regression

$$\min\left(\text{sum of squared residuals}\right)$$

Ridge Regression

$$\min\left(\text{sum of squared residuals} + \alpha \times \text{slope}^2\right)$$
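For a single independent variable, the ridge objective can be written out and minimized in closed form. Below, b₀ and b₁ (symbols introduced here) denote the intercept and slope, and x̄ and ȳ are the sample means. As is common, only the slope is penalized:

$$
\begin{aligned}
J(b_0, b_1) &= \sum_{i=1}^{n} \left(y_i - b_0 - b_1 x_i\right)^2 + \alpha\, b_1^2 \\
\frac{\partial J}{\partial b_0} = 0 \;&\Rightarrow\; b_0 = \bar{y} - b_1 \bar{x} \\
\frac{\partial J}{\partial b_1} = 0 \;&\Rightarrow\; b_1 = \frac{\sum_{i} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i} (x_i - \bar{x})^2 + \alpha}
\end{aligned}
$$

Setting α = 0 recovers the least squares slope. Because α appears only in the denominator, a larger α always shrinks the slope toward zero, though it never reaches zero for any finite α.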

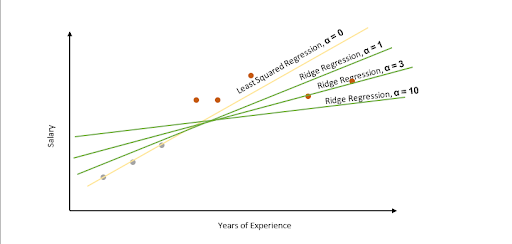

Effect of the penalty parameter (α)

As α increases, the slope of the regression line shrinks toward zero and the line becomes more horizontal.

As α increases, the model becomes less sensitive to variations in the independent variable (in our example, “years of experience”).
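Both effects can be checked numerically on the four rows of the salary table. The sketch below is pure Python and prints the fitted slope for increasing α. It uses the single-feature closed form slope = Sxy / (Sxx + α), with intercept = ȳ − slope·x̄, assuming only the slope is penalized. The α values and the helper `fit_ridge_1d` are illustrative choices. The slope is also the predicted salary change per additional year of experience, so a smaller slope means a less sensitive model.

```python
def fit_ridge_1d(points, alpha):
    """Ridge fit of y = intercept + slope * x with only the slope penalized."""
    n = len(points)
    x_bar = sum(x for x, _ in points) / n
    y_bar = sum(y for _, y in points) / n
    sxx = sum((x - x_bar) ** 2 for x, _ in points)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in points)
    slope = sxy / (sxx + alpha)
    return y_bar - slope * x_bar, slope

# The four (years_of_experience, salary) rows from the table above.
data = [(1.1, 39343), (1.3, 46205), (1.5, 37731), (2.0, 43525)]
alphas = [0.0, 0.1, 1.0, 10.0, 100.0]
fits = [fit_ridge_1d(data, a) for a in alphas]
for a, (b0, b1) in zip(alphas, fits):
    # b1 is also the predicted salary change for one extra year of experience.
    print(f"alpha={a:6.1f}  slope={b1:8.2f}  intercept={b0:9.2f}")
```

As the slope shrinks, the intercept drifts toward the mean salary, which is the "more horizontal" line described above.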

That brings us to the end of this article. I hope you now have a better understanding of ridge regression. Try applying it in your future machine learning models and see whether it improves the performance of a model that previously suffered from overfitting.

Article by: ADIL HUSSAIN
