Imagine a prosthetic arm with a human arm’s sensory capacities, and a robotic ankle that mimics a healthy ankle’s response to changing activity.

Hollywood has long popularized imaginative versions of such ideas. While human engineering may not yet be able to produce superhero-enabling devices, prosthetics are getting “smarter” and more adaptive, approaching a reality where amputees’ artificial limbs offer near-normal function.

Bioengineers are looking to create a “human-machine interface with a prosthetic limb that feels like an extension of their body,” said Robert Armiger, project director for amputee research at Johns Hopkins University’s Applied Physics Lab, where scientists have developed an arm with human-like reflexes and sensation.

The arm developed at Johns Hopkins APL has 26 joints and “load cells” in each fingertip to detect force, along with the torque applied to every knuckle. Sensors give feedback on vibration and temperature, collecting data to mimic what the human arm can detect. It responds to thought, much like a natural arm.

“Our bodies natively have control loops at various levels, and that is where AI comes into play, because there are things that you can build into that arm, like reflexes and anticipating an object you may be interacting with, while letting the user provide the highest level of control,” Armiger explained.

The system attaches to the user through an osseointegration implant, a small post that goes into the bone and protrudes through the skin. That arrangement lets the device clamp directly onto the skeletal system, giving the user a more fundamental sense of how the limb is moving. Because the device attaches to the bone, its perceived weight can also be lower than with traditional external attachments.

Users also undergo targeted muscle reinnervation, which reroutes nerves in the upper arm so that muscles twitch when the user thinks of moving their hand. Separate sensors attach to the skin over those muscles and match the signals to the intended movement.

“It’s a great mixture of the robotic arm technology, the cutting-edge surgical procedures, and these types of AI algorithms that, when those muscles twitch, understand and translate that into ‘I want to wiggle my pinkie finger,’” Armiger explained.
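
As a rough illustration of turning muscle twitches into an intended movement, the sketch below classifies a window of sensor readings by comparing it to the average pattern recorded for each movement. It is not the APL team's algorithm: the nearest-centroid method, the mean-absolute-value feature, the function names, and the sample readings are all assumptions made for the example.

```python
# Hypothetical sketch: map muscle-sensor windows to an intended movement.
# Nearest-centroid classification is an assumption, not the APL method.

def mean_absolute_value(window):
    """Average signal magnitude for one sensor channel."""
    return sum(abs(x) for x in window) / len(window)

def features(channels):
    """One feature per sensor channel."""
    return [mean_absolute_value(ch) for ch in channels]

def train_centroids(examples):
    """examples: list of (movement_label, channels) pairs."""
    sums, counts = {}, {}
    for label, channels in examples:
        f = features(channels)
        if label not in sums:
            sums[label] = [0.0] * len(f)
            counts[label] = 0
        sums[label] = [s + v for s, v in zip(sums[label], f)]
        counts[label] += 1
    return {lbl: [s / counts[lbl] for s in sums[lbl]] for lbl in sums}

def classify(centroids, channels):
    """Return the movement whose average pattern is closest."""
    f = features(channels)

    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(f, centroid))

    return min(centroids, key=lambda lbl: squared_distance(centroids[lbl]))

# Made-up readings from two sensor channels.
training = [
    ("wiggle_pinkie", [[0.9, -0.8, 1.0], [0.1, -0.1, 0.1]]),
    ("wiggle_pinkie", [[1.1, -0.9, 0.8], [0.2, -0.1, 0.0]]),
    ("open_hand", [[0.1, -0.2, 0.1], [0.9, -1.0, 0.8]]),
    ("open_hand", [[0.0, -0.1, 0.2], [1.0, -0.9, 1.1]]),
]
centroids = train_centroids(training)
intent = classify(centroids, [[0.8, -1.0, 0.9], [0.1, 0.0, -0.1]])
```

A real system would use many more channels, richer features, and a trained model, but the shape is the same: record signals for known movements, then match new signals to the closest known pattern.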

The opportunity lies in keeping hold of an object, such as grabbing a coffee cup and holding it steady so the contents do not spill: the kinds of activities that are more or less autonomously controlled within the body, Armiger added.
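
A reflex of this kind can be pictured as a small feedback loop that runs inside the arm without waiting for the user. The sketch below is only an illustration under stated assumptions: a simple proportional controller that nudges grip force toward a target fingertip load. The function name, gain, force limits, and the toy cup model are hypothetical, not details of the APL arm.

```python
# Hypothetical sketch of a local "reflex" loop for holding a cup steady.
# The proportional rule, gain, and units are assumptions for illustration.

def reflex_grip_step(current_force, measured_load, target_load,
                     gain=0.5, max_force=10.0):
    """Adjust grip force in proportion to the load error, within limits."""
    error = target_load - measured_load
    new_force = current_force + gain * error
    return max(0.0, min(max_force, new_force))

# Toy simulation: assume the fingertip load reading equals the applied force.
force = 1.0
for _ in range(20):
    measured = force
    force = reflex_grip_step(force, measured, target_load=3.0)
```

In this toy model each step halves the remaining error, so the grip settles near the target after a few iterations while the user's high-level intent ("hold the cup") stays unchanged.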

The arm is currently in the advanced prototype research phase, and the researchers are looking for potential transition partners. Armiger said his team has also been talking with the FDA about which type of approval it would require. Still, one of the biggest hurdles will be price.

A Prosthetic Leg with Computer Vision

For a person with a lower-limb amputation, walking with even the most basic prosthetic leg is typically easier than walking without one. But walking up stairs or across uneven terrain with a passive lower-limb prosthetic can be incredibly challenging.

Robotic prosthetics, with powered joints, can help overcome those challenges. At the same time, artificial intelligence (AI) can take artificial limbs one step further, giving them the ability to sense what a wearer is going to do.

Researchers at the University of Michigan introduced an AI-powered prosthetic leg in 2019 that can sense signals from the wearer’s muscles to tell whether they plan to start walking up stairs or down a ramp.

Now, a team from North Carolina State University has developed a computer vision system that gives a prosthetic leg the ability not just to “see” what is ahead, but also to calculate its degree of certainty in that prediction.

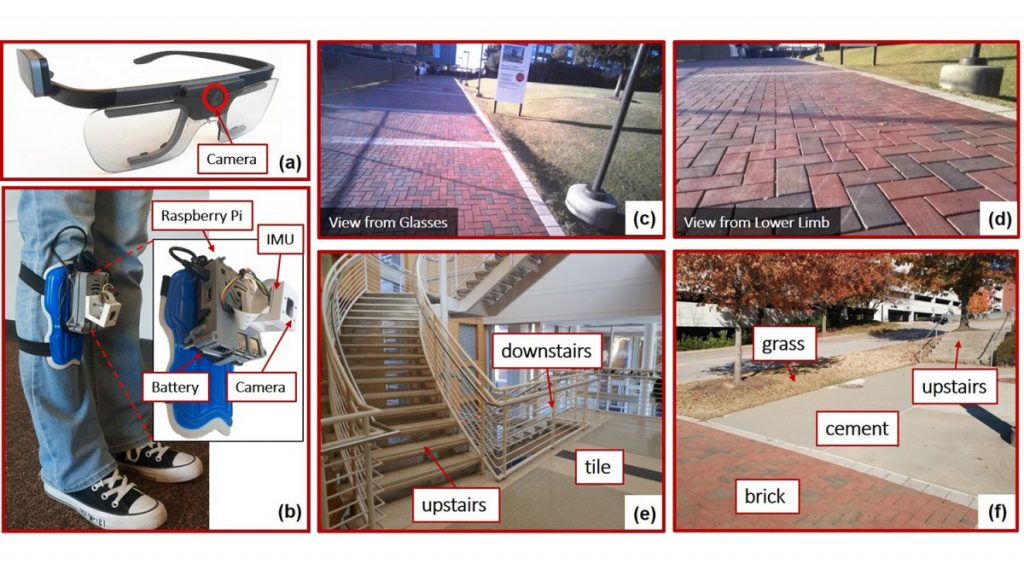

For their study, published in the journal IEEE Transactions on Automation Science and Engineering, the NC State researchers trained an AI to tell the difference between six kinds of terrain: vinyl, brick, concrete, grass, “upstairs,” and “downstairs.”

To train the AI to predict where it was headed, they walked around both indoors and outdoors while wearing cameras mounted on glasses and on their legs.

“We discovered that using both cameras worked well, but required a whole lot of computing power and might be cost-prohibitive,” researcher Helen Huang said in a news release.

“But we also found that using just the camera mounted on the lower limb worked fairly well, especially for near-term predictions, such as what the terrain would be like for the next step or two,” she continued.

While a computer vision system that can predict what’s ahead of a prosthetic leg wearer would be impressive on its own, the NC State researchers gave their AI an extra skill: it makes a prediction, then calculates its degree of certainty in that prediction and uses that to decide how to adjust its behavior.

“When the degree of uncertainty is too high, the AI is not forced to make a questionable decision: it could instead notify the user that it doesn’t have enough confidence in its prediction to act, or it could default to a ‘safe’ mode,” Zhong said.
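
The predict-then-check behavior can be sketched in a few lines. The example below is a simplified stand-in, not the NC State team's method: it assumes the vision system outputs one probability per terrain class, uses normalized entropy as the uncertainty score, and applies a made-up threshold. The names `choose_mode` and `max_uncertainty` are hypothetical.

```python
import math

# Terrain classes as listed in the article.
TERRAINS = ["vinyl", "brick", "concrete", "grass", "upstairs", "downstairs"]

def normalized_entropy(probs):
    """Uncertainty score in [0, 1]: 0 = fully confident, 1 = no idea.
    Entropy is an assumed stand-in for the researchers' uncertainty measure."""
    h = -sum(p * math.log(p) for p in probs if p > 0)
    return h / math.log(len(probs))

def choose_mode(probs, max_uncertainty=0.5):
    """Return (mode, uncertainty); fall back to 'safe' when unsure."""
    uncertainty = normalized_entropy(probs)
    if uncertainty > max_uncertainty:
        return "safe", uncertainty
    best = max(range(len(probs)), key=probs.__getitem__)
    return TERRAINS[best], uncertainty

confident = choose_mode([0.01, 0.01, 0.01, 0.01, 0.95, 0.01])
unsure = choose_mode([0.2, 0.2, 0.15, 0.15, 0.15, 0.15])
```

With the first input the sketch selects "upstairs"; with the second, where the probabilities are nearly flat, it falls back to the "safe" mode instead of guessing.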

The researchers believe this ability to factor in uncertainty could make their AI useful for applications far beyond prosthetics.

“We created a better way to teach deep-learning systems how to evaluate and measure uncertainty, in a way that allows the system to incorporate uncertainty into its own decision making,” researcher Edgar Lobaton said. “This is surely relevant for autonomous prosthetics, but our work here could be applied to any deep-learning system.”
